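Trouble scraping results (links, dates) from an SEC page with Selenium

Asked 2022-10-01 12:19:03

I want to copy the links and dates of a specific stock's earnings and other reports (8-K, 10-Q). I have tried iterating over the results table class, but I get a timeout error when using Selenium. Any help would be greatly appreciated. Thank you!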

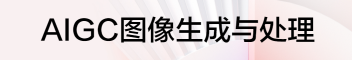

Web page:

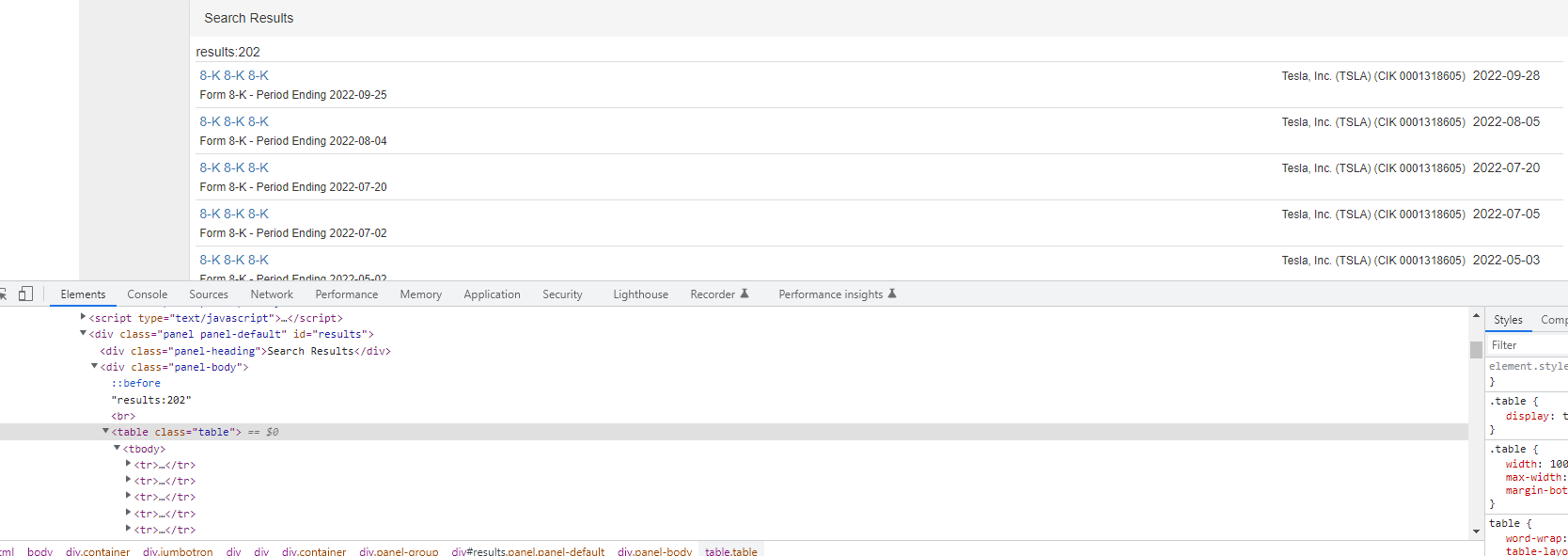

Approach 1)

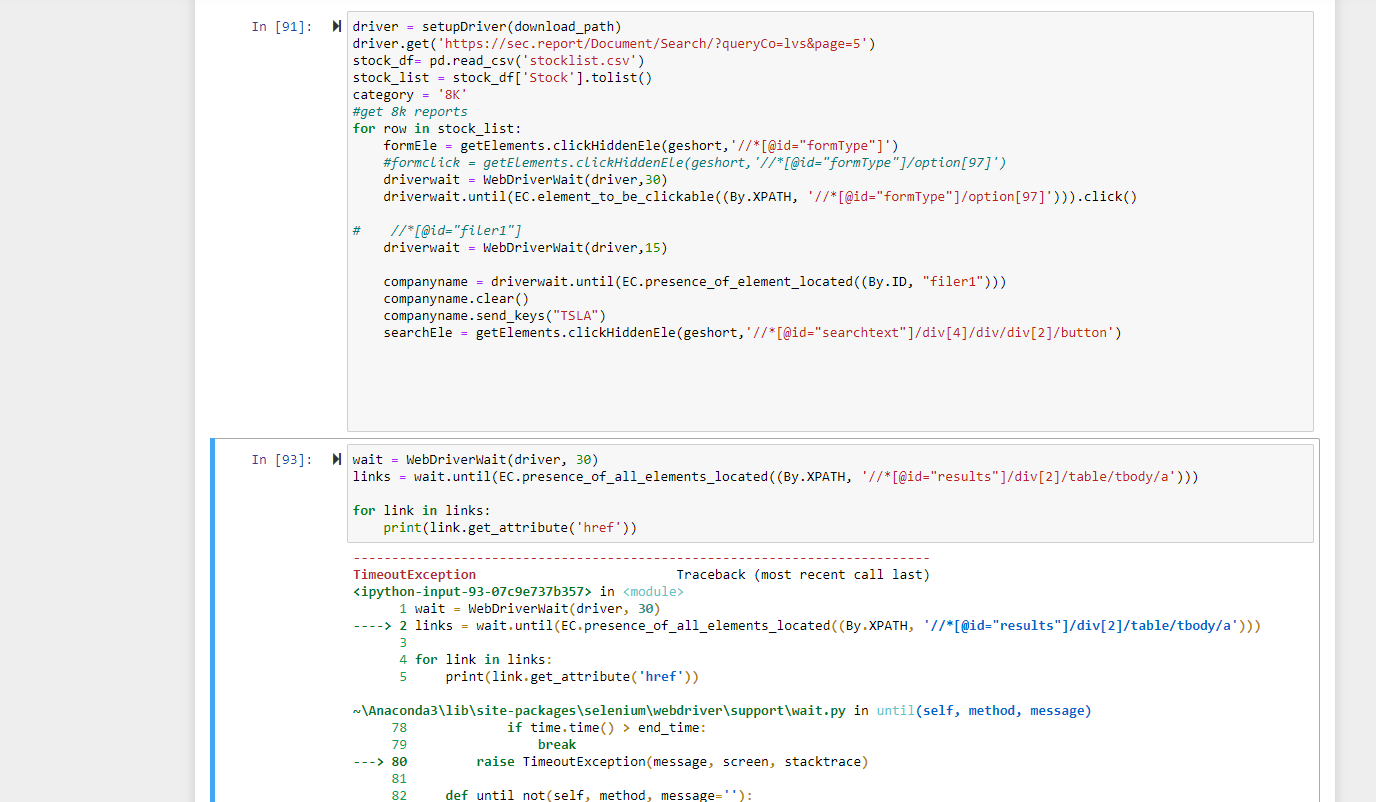

Approach 2) Tried looping through the individual result XPaths of the table:

    # Approach 1
    driver = setupDriver(download_path)
    driver.get('https://sec.report/Document/Search/?queryCo=lvs&page=5')
    formEle = getElements.clickHiddenEle(geshort,'//*[@id="formType"]')
    #formclick = getElements.clickHiddenEle(geshort,'//*[@id="formType"]/option[97]')
    driverwait = WebDriverWait(driver,30)
    driverwait.until(EC.element_to_be_clickable((By.XPATH, '//*[@id="formType"]/option[97]'))).click()
    # //*[@id="filer1"]
    driverwait = WebDriverWait(driver,15)
    companyname = driverwait.until(EC.presence_of_element_located((By.ID, "filer1")))
    companyname.clear()
    companyname.send_keys("TSLA")
    searchEle = getElements.clickHiddenEle(geshort,'//*[@id="searchtext"]/div[4]/div/div[2]/button')
    wait = WebDriverWait(driver, 30)
    links = wait.until(EC.presence_of_all_elements_located((By.XPATH, '//*[@id="results"]/div[2]/table/tbody/a')))
    for link in links:
        print(link.get_attribute('href'))

    # Approach 2 code

    driver = setupDriver(download_path)
    driver.get('https://sec.report/Document/Search/?queryCo=lvs&page=5')
    formEle = getElements.clickHiddenEle(geshort,'//*[@id="formType"]')
    #formclick = getElements.clickHiddenEle(geshort,'//*[@id="formType"]/option[97]')
    driverwait = WebDriverWait(driver,30)
    driverwait.until(EC.element_to_be_clickable((By.XPATH, '//*[@id="formType"]/option[97]'))).click()
    # //*[@id="filer1"]
    driverwait = WebDriverWait(driver,15)
    companyname = driverwait.until(EC.presence_of_element_located((By.ID, "filer1")))
    companyname.clear()
    companyname.send_keys("TSLA")
    searchEle = getElements.clickHiddenEle(geshort,'//*[@id="searchtext"]/div[4]/div/div[2]/button')
    href = []
    for i in range(0,200):
        wait = WebDriverWait(driver, 40)
        wait.until(EC.presence_of_all_elements_located((By.XPATH, '//*[@id="results"]/div[2]/table/tbody/tr[6]/td['+str(i)+']/div[1]')))
        headings = driver.find_elements_by_xpath('//*[@id="results"]/div[2]/table/tbody/tr['+str(i)+']/td[1]/div[1]')
        link = heading.find_element_by_tag_name("a")
        x = link.get_attribute("href")
        href.append(x)
    print(href)

1 Answer

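Stack Overflow user

Accepted answer

Answered 2022-10-01 14:13:55

Hopefully OP's next question will include code (not images) and a minimal reproducible example. Here is one solution to the problem. As pointed out in the comments, Selenium should be a last resort for web scraping: it is a testing tool, not a web scraping tool. The following solution extracts the date, form name, form description, and form URL from the 11 pages of data on LVS: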

    from bs4 import BeautifulSoup as bs
    import requests
    from tqdm import tqdm
    import pandas as pd

    headers = {'User-Agent': 'Sample Company Name AdminContact@<sample company domain>.com'}

    # Reuse one session so the User-Agent header is sent with every request
    s = requests.Session()
    s.headers.update(headers)

    big_list = []
    # Result pages 1 through 11
    for x in tqdm(range(1, 12)):
        r = s.get(f'https://sec.report/Document/Search/?queryCo=lvs&page={x}')
        soup = bs(r.text, 'html.parser')
        data_rows = soup.select('table.table tr')
        for row in data_rows:
            form_title = row.select('a')[0].get_text(strip=True)
            form_url = row.select('a')[0].get('href')
            form_desc = row.select('small')[0].get_text(strip=True)
            # The date is in the last cell of the row
            form_date = row.select('td')[-1].get_text(strip=True)
            big_list.append((form_date, form_title, form_desc, form_url))

    df = pd.DataFrame(big_list, columns = ['Date', 'Title', 'Description', 'Url'])
    print(df)

Result:

    Date Title Description Url
    0 2022-09-14 8-K 8-K 8-K Form 8-K - Period Ending 2022-09-14 https://sec.report/Document/0001300514-22-000101/#lvs-20220914.htm
    1 2022-08-29 40-APP/A 40-APP/A 40-APP/A Form 40-APP https://sec.report/Document/0001193125-22-231550/#d351601d40appa.htm
    2 2022-07-22 10-Q 10-Q 10-Q Form 10-Q - Period Ending 2022-06-30 https://sec.report/Document/0001300514-22-000094/#lvs-20220630.htm
    3 2022-07-20 8-K 8-K 8-K Form 8-K - Period Ending 2022-07-20 https://sec.report/Document/0001300514-22-000088/#lvs-20220720.htm
    4 2022-07-11 8-K 8-K 8-K Form 8-K - Period Ending 2022-07-11 https://sec.report/Document/0001300514-22-000084/#lvs-20220711.htm
    ... ... ... ... ...
    1076 2004-11-22 S-1/A S-1/A S-1/A Form S-1 https://sec.report/Document/0001047469-04-034893/#a2143958zs-1a.htm
    1077 2004-10-25 S-1/A S-1/A S-1/A Form S-1 https://sec.report/Document/0001047469-04-031910/#a2143958zs-1a.htm
    1078 2004-10-20 8-K 8-K 8-K Form 8-K - Period Ending 2004-09-30 https://sec.report/Document/0001047469-04-031637/#a2145253z8-k.htm
    1079 2004-10-08 UPLOAD LETTER Form UPLOAD https://sec.report/Document/0000000000-04-032407/#filename1.txt
    1080 2004-09-03 S-1 S-1 S-1 Form S-1 https://sec.report/Document/0001047469-04-028031/#a2142433zs-1.htm

    1081 rows × 4 columns

You can extract other things as well, such as the company name and the person's name (I assume that is the person who filed the form).
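Since the question asked specifically about 8-K and 10-Q reports, the collected rows can be narrowed down after scraping. The following is a minimal sketch in plain Python, assuming each row is a `(date, title, description, url)` tuple as in `big_list` and that the title starts with the form type. The sample rows, the `example.com` URLs, and the names `WANTED_FORMS`, `is_wanted`, and `filter_filings` are hypothetical and only serve the illustration.

```python
# Hypothetical sample rows in the same (date, title, description, url) order
# as big_list; the URLs are placeholders, not real filings.
rows = [
    ("2022-09-14", "8-K", "Form 8-K - Period Ending 2022-09-14", "https://example.com/a"),
    ("2022-08-29", "40-APP/A", "Form 40-APP", "https://example.com/b"),
    ("2022-07-22", "10-Q", "Form 10-Q - Period Ending 2022-06-30", "https://example.com/c"),
    ("2022-05-02", "10-Q/A", "Form 10-Q", "https://example.com/d"),
]

# Form types to keep (assumption: these are the reports of interest).
WANTED_FORMS = {"8-K", "10-Q"}


def is_wanted(title, include_amendments=False):
    """Return True if the first token of the title is a wanted form type."""
    tokens = title.split()
    if not tokens:
        return False
    form = tokens[0].upper()
    # Amendments carry an "/A" suffix, e.g. "10-Q/A".
    if include_amendments and form.endswith("/A"):
        form = form[:-2]
    return form in WANTED_FORMS


def filter_filings(filings, include_amendments=False):
    """Keep only (date, url) pairs for the wanted form types."""
    return [
        (date, url)
        for date, title, _desc, url in filings
        if is_wanted(title, include_amendments)
    ]


earnings = filter_filings(rows)
for date, url in earnings:
    print(date, url)
```

Passing `include_amendments=True` would also keep amended filings such as `10-Q/A`. If the scraped title turns out to be formatted differently, the matching in `is_wanted` would need to be adjusted.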

BeautifulSoup documentation: https://beautiful-soup-4.readthedocs.io/en/latest/index.html, Requests documentation: https://requests.readthedocs.io/en/latest/, for TQDM visit https://pypi.org/project/tqdm/, and for pandas: https://pandas.pydata.org/pandas-docs/stable/index.html
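A side note on the timeout in the question's Approach 1: the XPath there ends in `tbody/a`, which only matches `a` elements that are direct children of `tbody`. The XPath in Approach 2 suggests the links sit deeper, inside `tr/td/div`, so the wait probably never finds a match and times out. The sketch below illustrates the difference with the standard library's ElementTree on a made-up fragment; the markup and the `example.com` URLs are assumptions, not the real page.

```python
import xml.etree.ElementTree as ET

# Made-up fragment that mimics the nesting implied by the question's XPaths.
SAMPLE = """
<div id="results">
  <div>header</div>
  <div>
    <table>
      <tbody>
        <tr>
          <td><div><a href="https://example.com/doc-1">8-K</a></div></td>
          <td>2022-09-14</td>
        </tr>
        <tr>
          <td><div><a href="https://example.com/doc-2">10-Q</a></div></td>
          <td>2022-07-22</td>
        </tr>
      </tbody>
    </table>
  </div>
</div>
"""

root = ET.fromstring(SAMPLE)

# Child axis: looks for <a> directly under <tbody>, which never occurs here.
direct_links = root.findall("./div[2]/table/tbody/a")

# Descendant axis: finds <a> at any depth below <tbody>.
nested_links = root.findall("./div[2]/table/tbody//a")
nested_hrefs = [a.get("href") for a in nested_links]

print(len(direct_links))
print(nested_hrefs)
```

In Selenium, the analogous change would be to end the locator with `/tbody//a` instead of `/tbody/a`, assuming the real page nests its links the same way.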

Original page content provided by Stack Overflow.

Original link:

https://stackoverflow.com/questions/73921477
