Advancing with Message Middleware, Part 9: Using the Kafka APIs

民工哥

Published 2023-08-22 14:17:09

Producer API

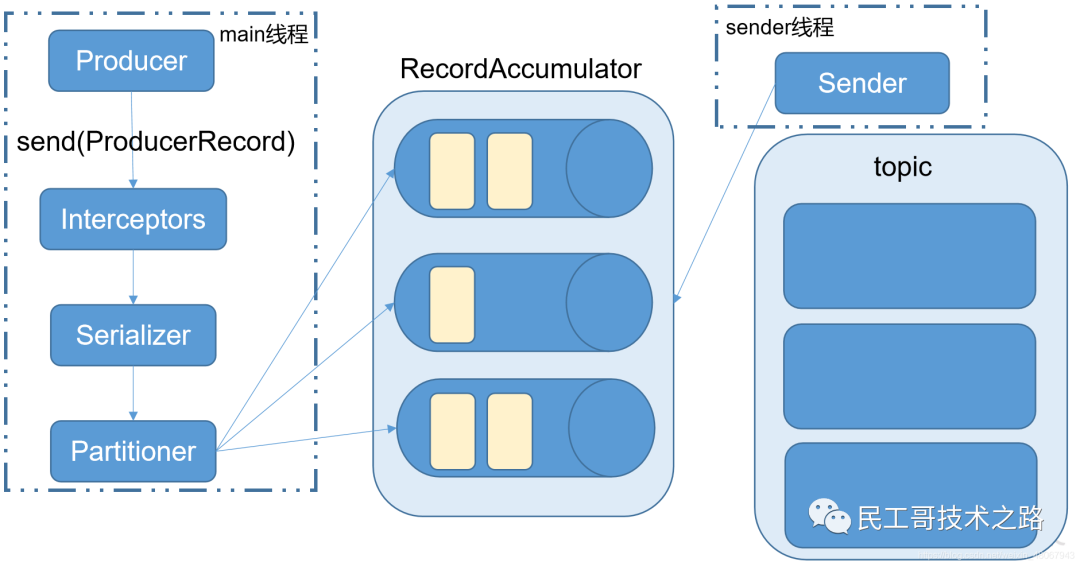

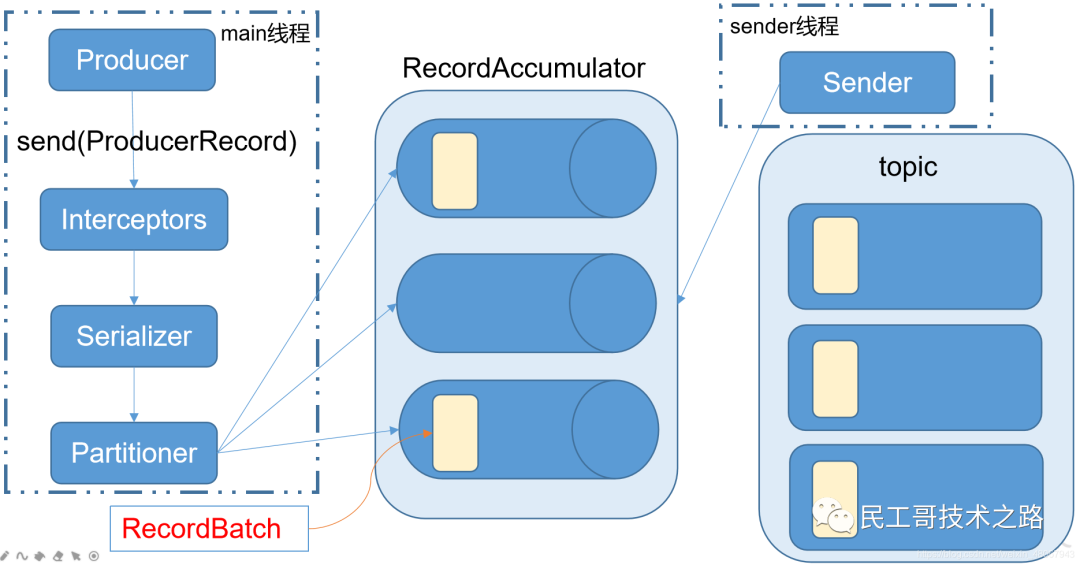

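Message sending flow

- The Kafka producer sends messages asynchronously. Two threads are involved, the main thread and the Sender thread, along with a buffer shared between them, the RecordAccumulator. The main thread appends messages to the RecordAccumulator, and the Sender thread continuously pulls messages from it and sends them to the Kafka brokers.

Order of processing on the main thread: interceptors -> serializer -> partitioner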
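As a rough illustration of that order, here is a stdlib-only toy pipeline. `ToySendPipeline`, the `[traced]` interceptor and the length-based partitioner are all hypothetical; Kafka's real `ProducerInterceptor`, `Serializer` and `Partitioner` interfaces take more arguments. The sketch only shows that the partitioner runs last, so it works on the value after interception and serialization.

```java
import java.nio.charset.StandardCharsets;
import java.util.function.Function;
import java.util.function.ToIntFunction;
import java.util.function.UnaryOperator;

// Hypothetical toy model of the main-thread stages; these are not Kafka's classes.
class ToySendPipeline {
    // Stage 1: interceptor, may modify the record value before anything else happens
    static final UnaryOperator<String> INTERCEPTOR = value -> "[traced] " + value;

    // Stage 2: serializer, turns the value into bytes (UTF-8, like StringSerializer)
    static final Function<String, byte[]> SERIALIZER =
            value -> value.getBytes(StandardCharsets.UTF_8);

    // Stage 3: partitioner; this toy one uses byte length modulo the partition count
    static ToIntFunction<byte[]> partitioner(int numPartitions) {
        return bytes -> bytes.length % numPartitions;
    }

    // Runs the three stages in order and returns the chosen partition
    static int route(String value, int numPartitions) {
        String intercepted = INTERCEPTOR.apply(value);
        byte[] serialized = SERIALIZER.apply(intercepted);
        return partitioner(numPartitions).applyAsInt(serialized);
    }

    public static void main(String[] args) {
        // "[traced] hi" is 11 bytes and 11 % 3 == 2
        System.out.println(route("hi", 3)); // prints 2
    }
}
```

Because the interceptor ran first, the partitioner saw 11 bytes rather than the 2 bytes of the original value.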

Related parameters

batch.size # once the data accumulated in a batch reaches batch.size, the Sender sends it.

linger.ms # if a batch has not reached batch.size, the Sender sends it anyway after waiting linger.ms.
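The interplay of the two settings can be modeled with a small single-partition sketch. `ToyAccumulator`, `append` and `drain` are hypothetical names, and the model is deliberately simplified: Kafka's real RecordAccumulator keeps per-partition queues of batches and also has to deal with `buffer.memory`, request size limits, `flush()` and `close()`. Time is passed in explicitly so the behavior is deterministic.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical, single-partition model of the batch.size / linger.ms rule (not Kafka's RecordAccumulator).
class ToyAccumulator {
    private final int batchSizeBytes; // plays the role of batch.size
    private final long lingerMs;      // plays the role of linger.ms
    private final List<String> batch = new ArrayList<>();
    private int batchBytes = 0;
    private long batchStartMs = 0;

    ToyAccumulator(int batchSizeBytes, long lingerMs) {
        this.batchSizeBytes = batchSizeBytes;
        this.lingerMs = lingerMs;
    }

    // "Main thread": append a record at time nowMs
    void append(String record, long nowMs) {
        if (batch.isEmpty()) {
            batchStartMs = nowMs; // the linger clock starts with the first record of a batch
        }
        batch.add(record);
        batchBytes += record.getBytes(StandardCharsets.UTF_8).length;
    }

    // "Sender thread": hand over the batch if it is full or has waited linger.ms, else return null
    List<String> drain(long nowMs) {
        if (batch.isEmpty()) {
            return null;
        }
        boolean full = batchBytes >= batchSizeBytes;
        boolean lingered = nowMs - batchStartMs >= lingerMs;
        if (!full && !lingered) {
            return null;
        }
        List<String> ready = new ArrayList<>(batch);
        batch.clear();
        batchBytes = 0;
        return ready;
    }

    public static void main(String[] args) {
        ToyAccumulator acc = new ToyAccumulator(10, 5); // batch.size = 10 bytes, linger.ms = 5
        acc.append("abcd", 0);
        System.out.println(acc.drain(1)); // null: 4 bytes, only 1 ms waited
        acc.append("efghij", 2);
        System.out.println(acc.drain(2)); // [abcd, efghij]: 10 bytes, batch is full
        acc.append("k", 3);
        System.out.println(acc.drain(7)); // null: 1 byte, 4 ms waited
        System.out.println(acc.drain(8)); // [k]: linger.ms has elapsed
    }
}
```

The trade-off it shows: a larger batch.size or linger.ms gives bigger, more efficient batches at the cost of extra latency for sparse traffic.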

Asynchronous send API

Import the dependencies

```xml
<dependencies>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>2.4.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-slf4j-impl</artifactId>
        <version>2.12.0</version>
    </dependency>
</dependencies>
```

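Add a log4j2 configuration file for logging (for example `log4j2.xml` under `src/main/resources`, where Log4j 2 picks it up from the classpath):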

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="error" strict="true" name="XMLConfig">
    <Appenders>
        <!-- Appender of type Console; the name attribute is required -->
        <Appender type="Console" name="STDOUT">
            <!-- PatternLayout; output looks like
                 [INFO] [2018-01-22 17:34:01][org.test.Console]I'm here -->
            <Layout type="PatternLayout"
                    pattern="[%p] [%d{yyyy-MM-dd HH:mm:ss}][%c{10}]%m%n" />
        </Appender>
    </Appenders>
    <Loggers>
        <!-- additivity is false: events logged here are not passed on to parent loggers -->
        <Logger name="test" level="info" additivity="false">
            <AppenderRef ref="STDOUT" />
        </Logger>
        <!-- root logger configuration -->
        <Root level="info">
            <AppenderRef ref="STDOUT" />
        </Root>
    </Loggers>
</Configuration>
```

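Write the code

Classes used:

KafkaProducer # the producer object that sends the data

ProducerConfig # provides constants for the configuration parameter names

ProducerRecord # every piece of data must be wrapped in a ProducerRecord

- The simplest version of the Kafka producer API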

```java
import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class MyProducer {

    public static void main(String[] args) {
        Properties properties = new Properties();
        properties.put("bootstrap.servers", "hadoop105:9092");
        properties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        properties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);
        for (int i = 0; i < 10; i++) {
            // send() is asynchronous: it appends the record to the buffer and returns immediately
            producer.send(new ProducerRecord<>("bajie", "--gaolaozhuangshuiyundong--" + i));
        }
        // close() sends any buffered records before shutting the producer down
        producer.close();
    }
}
```

```
[xiaoxq@hadoop105 kafka_2.11-2.4.1]$ bin/kafka-console-consumer.sh --bootstrap-server hadoop105:9092 --topic bajie
--gaolaozhuangshuiyundong--0
--gaolaozhuangshuiyundong--1
--gaolaozhuangshuiyundong--2
--gaolaozhuangshuiyundong--3
--gaolaozhuangshuiyundong--4
--gaolaozhuangshuiyundong--5
--gaolaozhuangshuiyundong--6
--gaolaozhuangshuiyundong--7
--gaolaozhuangshuiyundong--8
--gaolaozhuangshuiyundong--9
```

API without a callback

```java
import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;

public class MyProducer2 {

    public static void main(String[] args) {
        // Create the producer configuration
        Properties properties = new Properties();
        // 1. Cluster to connect to
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "hadoop105:9092");
        // 2. acks level ("all": the leader waits for all in-sync replicas)
        properties.put(ProducerConfig.ACKS_CONFIG, "all");
        // 3. Number of retries
        properties.put(ProducerConfig.RETRIES_CONFIG, "2");
        // 4. Batch size in bytes
        properties.put(ProducerConfig.BATCH_SIZE_CONFIG, "16384");
        // 5. linger.ms: how long to wait for a batch to fill
        properties.put(ProducerConfig.LINGER_MS_CONFIG, 1);
        // 6. Total buffer memory in bytes
        properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG, "33554432");
        // 7. Key and value serializers
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");

        // Create the producer
        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);

        // Send the data
        for (int i = 0; i < 10; i++) {
            producer.send(new ProducerRecord<>("bajie", "gaolaozhuang---" + i));
        }

        // Close the producer
        producer.close();
    }
}
```

```
[xiaoxq@hadoop105 kafka_2.11-2.4.1]$ bin/kafka-console-consumer.sh --bootstrap-server hadoop105:9092 --topic bajie
gaolaozhuang---0
gaolaozhuang---1
gaolaozhuang---2
gaolaozhuang---3
gaolaozhuang---4
gaolaozhuang---5
gaolaozhuang---6
gaolaozhuang---7
gaolaozhuang---8
gaolaozhuang---9
```

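API with a callback

- The callback is invoked asynchronously when the producer receives the ack. It takes two parameters, a RecordMetadata and an Exception: if the Exception is null, the message was sent successfully; if it is not null, the send failed.

- Note: failed sends are retried automatically by the producer (for retriable errors, up to the `retries` setting), so there is no need to retry manually in the callback.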
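The contract described in these two points (exactly one callback per record, fired only after the producer's own retries have succeeded or run out) can be modeled without a broker. `ToyRetryingSender`, `ToyMetadata` and the `attempt` supplier are hypothetical stand-ins; the real producer decides internally which errors are retriable.

```java
import java.util.concurrent.Callable;
import java.util.function.BiConsumer;

// Hypothetical model of "retry internally, then call back once"; not the Kafka producer's code.
class ToyRetryingSender {
    // Hypothetical stand-in for RecordMetadata
    static final class ToyMetadata {
        final int partition;
        final long offset;

        ToyMetadata(int partition, long offset) {
            this.partition = partition;
            this.offset = offset;
        }
    }

    // Tries up to 1 + retries times, then invokes the callback exactly once:
    // (metadata, null) on success, or (null, lastException) when retries are exhausted.
    static void send(Callable<ToyMetadata> attempt, int retries,
                     BiConsumer<ToyMetadata, Exception> callback) {
        Exception last = null;
        for (int i = 0; i <= retries; i++) {
            ToyMetadata metadata;
            try {
                metadata = attempt.call();
            } catch (Exception e) {
                last = e; // failed attempt: retried internally, the callback is not invoked yet
                continue;
            }
            callback.accept(metadata, null);
            return;
        }
        callback.accept(null, last);
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Fails twice, succeeds on the third attempt; with retries = 2 the callback only sees success
        send(() -> {
            calls[0]++;
            if (calls[0] < 3) {
                throw new IllegalStateException("transient failure " + calls[0]);
            }
            return new ToyMetadata(1, 42L);
        }, 2, (metadata, e) -> {
            if (e == null) {
                System.out.println("partition:" + metadata.partition + "--offset:" + metadata.offset);
            } else {
                System.out.println("failed: " + e.getMessage());
            }
        });
        // prints partition:1--offset:42
    }
}
```

Retrying again inside the callback would stack a second retry loop on top of the producer's own, which is why the note above advises against it.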

```java
import java.util.Properties;

import org.apache.kafka.clients.producer.Callback;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;

public class MyProducer3 {

    public static void main(String[] args) {
        // Create the producer configuration
        Properties properties = new Properties();
        // 1. Cluster to connect to (broker list)
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "hadoop105:9092");
        // 2. acks level
        properties.put(ProducerConfig.ACKS_CONFIG, "all");
        // 3. Number of retries
        properties.put(ProducerConfig.RETRIES_CONFIG, "2");
        // 4. Batch size: 16 KB
        properties.put(ProducerConfig.BATCH_SIZE_CONFIG, "16384");
        // 5. linger.ms: 1 ms
        properties.put(ProducerConfig.LINGER_MS_CONFIG, 1);
        // 6. Buffer memory: 32 MB
        properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG, "33554432");
        // 7. Key and value serializers
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");

        // Create the producer
        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);

        // Send the data
        for (int i = 0; i < 10; i++) {
            producer.send(new ProducerRecord<>("bajie", "er shi xiong shi fu bei yao guai zhua zou le---" + i), new Callback() {
                // Called with the metadata when the ack arrives, or with an exception if the send failed
                @Override
                public void onCompletion(RecordMetadata recordMetadata, Exception e) {
                    if (e == null) {
                        System.out.println("topic:" + recordMetadata.topic()
                                + "--partition:" + recordMetadata.partition()
                                + "--offset:" + recordMetadata.offset());
                    } else {
                        // The producer has already applied its retries; just report the failure
                        e.printStackTrace();
                    }
                }
            });
        }

        // close() waits until all buffered records have been sent before shutting the producer down
        producer.close();
    }
}
```

Console output:

```
topic:bajie--partition:1--offset:10
topic:bajie--partition:1--offset:11
topic:bajie--partition:1--offset:12
topic:bajie--partition:1--offset:13
topic:bajie--partition:1--offset:14
topic:bajie--partition:1--offset:15
topic:bajie--partition:1--offset:16
topic:bajie--partition:1--offset:17
topic:bajie--partition:1--offset:18
topic:bajie--partition:1--offset:19
```

Records added to the topic:

```
er shi xiong shi fu bei yao guai zhua zou le---0
er shi xiong shi fu bei yao guai zhua zou le---1
er shi xiong shi fu bei yao guai zhua zou le---2
er shi xiong shi fu bei yao guai zhua zou le---3
er shi xiong shi fu bei yao guai zhua zou le---4
er shi xiong shi fu bei yao guai zhua zou le---5
er shi xiong shi fu bei yao guai zhua zou le---6
er shi xiong shi fu bei yao guai zhua zou le---7
er shi xiong shi fu bei yao guai zhua zou le---8
er shi xiong shi fu bei yao guai zhua zou le---9
```

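Partitioner

The default partitioner: DefaultPartitioner

interceptors (filter or modify records) -> serializer (serializes the data so it can be transmitted) -> partitioner (assigns a partition)

How DefaultPartitioner chooses a partition:

If a key is specified, the partition is computed from a hash of the key, so the same key always lands in the same partition (as long as the number of partitions does not change). If no key is specified, one of the currently available partitions is chosen. If the ProducerRecord names a partition explicitly, that partition is used directly and the partitioner is not consulted.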
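The keyed case can be sketched as follows. Kafka's DefaultPartitioner hashes the serialized key with murmur2 and takes the non-negative result modulo the number of partitions; to stay dependency-free, this sketch substitutes `Arrays.hashCode` for murmur2, so the partition numbers it prints will not match a real cluster. `KeyHashPartitionSketch` is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical sketch of key-based partitioning. Arrays.hashCode stands in for Kafka's murmur2,
// so only the structure (hash, make non-negative, modulo) matches the real DefaultPartitioner.
class KeyHashPartitionSketch {
    static int partitionForKey(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        int hash = Arrays.hashCode(keyBytes);
        // Clear the sign bit so the result is non-negative before taking the modulo
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int numPartitions = 3;
        for (String key : new String[] {"bajie", "wukong", "bajie"}) {
            System.out.println(key + " -> partition " + partitionForKey(key, numPartitions));
        }
        // "bajie" appears twice and maps to the same partition both times
    }
}
```

The modulo is also why adding partitions to a topic changes where existing keys land.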

Custom partitioner

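```java
package partitioner;

import java.nio.charset.StandardCharsets;
import java.util.Map;

import org.apache.kafka.clients.producer.Partitioner;
import org.apache.kafka.common.Cluster;

public class MyPartitioner implements Partitioner {

    /**
     * Partitioning logic:
     * values containing "bajie" go to partition 0,
     * all other values go to partition 1
     * (so the topic needs at least two partitions).
     */
    @Override
    public int partition(String topic, Object key, byte[] keyBytes,
                         Object value, byte[] valueBytes, Cluster cluster) {
        if (valueBytes != null && new String(valueBytes, StandardCharsets.UTF_8).contains("bajie")) {
            return 0;
        } else {
            return 1;
        }
    }

    @Override
    public void close() {
    }

    @Override
    public void configure(Map<String, ?> configs) {
    }
}
```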

Writing PartitionerProducer

```java
import java.util.Properties;

import org.apache.kafka.clients.producer.Callback;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;

public class PartitionerProducer {

    public static void main(String[] args) {
        // Create the producer configuration
        Properties properties = new Properties();
        // 1. Cluster to connect to (broker list)
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "hadoop105:9092");
        // 2. acks level
        properties.put(ProducerConfig.ACKS_CONFIG, "all");
        // 3. Number of retries
        properties.put(ProducerConfig.RETRIES_CONFIG, "2");
        // 4. Batch size: 16 KB
        properties.put(ProducerConfig.BATCH_SIZE_CONFIG, "16384");
        // 5. linger.ms: 1 ms
        properties.put(ProducerConfig.LINGER_MS_CONFIG, 1);
        // 6. Buffer memory: 32 MB
        properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG, "33554432");
        // 7. Custom partitioner (fully qualified class name of MyPartitioner)
        properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, "partitioner.MyPartitioner");
        // 8. Key and value serializers
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");

        // Create the producer
        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);

        // One callback shared by all sends: metadata on success, the error on failure
        Callback callback = (recordMetadata, e) -> {
            if (e == null) {
                System.out.println("topic:" + recordMetadata.topic()
                        + "--partition:" + recordMetadata.partition()
                        + "--offset:" + recordMetadata.offset());
            } else {
                e.printStackTrace();
            }
        };

        // Send the data: the first five values contain "bajie", the last five do not
        for (int i = 0; i < 10; i++) {
            String value = i < 5
                    ? "er shi xiong shi bajie ---" + i
                    : "da shi xiong shi wu kong ---" + i;
            producer.send(new ProducerRecord<>("bajie", value), callback);
        }

        // close() waits until all buffered records have been sent before shutting the producer down
        producer.close();
    }
}
```

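Output:

```
topic:bajie--partition:1--offset:25
topic:bajie--partition:1--offset:26
topic:bajie--partition:1--offset:27
topic:bajie--partition:1--offset:28
topic:bajie--partition:1--offset:29
topic:bajie--partition:0--offset:14
topic:bajie--partition:0--offset:15
topic:bajie--partition:0--offset:16
topic:bajie--partition:0--offset:17
topic:bajie--partition:0--offset:18
```

Five records landed in each partition, as MyPartitioner intends. Callbacks for records in the same partition run in send order, but there is no ordering guarantee across partitions, which is why the partition 1 results happen to be printed first here.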

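Synchronous send API

- Synchronous sending means that after a message is sent, the current thread blocks until the ack comes back.

- Because send() returns a Future, we can get synchronous behavior simply by calling the Future's get() method.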
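The blocking behavior can be imitated without a broker. In this hypothetical sketch (`SyncSendSketch`, the `acked-`/`returned-` log entries and the 10 ms sleep are all invented for illustration), a single-thread `ExecutorService` stands in for the Sender thread and the `Future` it returns stands in for the `Future<RecordMetadata>` from `send()`. Calling `get()` after each submission forces strict one-at-a-time ordering.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical model: the executor plays the Sender thread, the Future plays send()'s return value.
class SyncSendSketch {
    static List<String> run(int count) throws InterruptedException, ExecutionException {
        List<String> log = new CopyOnWriteArrayList<>();
        ExecutorService sender = Executors.newSingleThreadExecutor();
        try {
            for (int i = 0; i < count; i++) {
                final int n = i;
                // "send": returns a Future immediately; the "ack" is produced on the other thread
                Future<Long> offset = sender.submit(() -> {
                    Thread.sleep(10); // pretend network round trip
                    log.add("acked-" + n);
                    return (long) n;
                });
                // Synchronous send: block until the "ack" arrives before sending the next record
                long acked = offset.get();
                log.add("returned-" + acked);
            }
        } finally {
            sender.shutdown();
        }
        return log;
    }

    public static void main(String[] args) throws Exception {
        // prints [acked-0, returned-0, acked-1, returned-1, acked-2, returned-2]
        System.out.println(run(3));
    }
}
```

Dropping the `get()` call would let the loop race ahead of the acks, which is the normal asynchronous mode: higher throughput, but no per-record blocking.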

Writing SyncProducer

```java
import java.util.Properties;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

import org.apache.kafka.clients.producer.Callback;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;

public class SyncProducer {

    public static void main(String[] args) throws ExecutionException, InterruptedException {
        // Create the producer configuration
        Properties properties = new Properties();
        // 1. Cluster to connect to (broker list)
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "hadoop105:9092");
        // 2. acks level
        properties.put(ProducerConfig.ACKS_CONFIG, "all");
        // 3. Number of retries
        properties.put(ProducerConfig.RETRIES_CONFIG, "2");
        // 4. Batch size: 16 KB
        properties.put(ProducerConfig.BATCH_SIZE_CONFIG, "16384");
        // 5. linger.ms: 1 ms
        properties.put(ProducerConfig.LINGER_MS_CONFIG, 1);
        // 6. Buffer memory: 32 MB
        properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG, "33554432");
        // 7. Key and value serializers
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");

        // Create the producer
        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);

        // Send the data
        for (int i = 0; i < 10; i++) {
            Future<RecordMetadata> future = producer.send(
                    new ProducerRecord<>("bajie", "er shi xiong shi bajie ---" + i),
                    new Callback() {
                        // Called with the metadata when the ack arrives, or with an exception if the send failed
                        @Override
                        public void onCompletion(RecordMetadata recordMetadata, Exception e) {
                            if (e == null) {
                                System.out.println("topic:" + recordMetadata.topic()
                                        + "--partition:" + recordMetadata.partition()
                                        + "--offset:" + recordMetadata.offset());
                            }
                        }
                    });
            //System.out.println("Message has been sent");
            // get() blocks until this record is acknowledged (and throws ExecutionException if the send failed)
            future.get();
        }

        // close() waits until all buffered records have been sent before shutting the producer down
        producer.close();
    }
}
```

Output:

```
topic:bajie--partition:0--offset:34
topic:bajie--partition:1--offset:35
topic:bajie--partition:0--offset:35
topic:bajie--partition:1--offset:36
topic:bajie--partition:0--offset:36
topic:bajie--partition:1--offset:37
topic:bajie--partition:0--offset:37
topic:bajie--partition:1--offset:38
topic:bajie--partition:0--offset:38
topic:bajie--partition:1--offset:39
```

Output with the `//System.out.println("Message has been sent");` line uncommented. Because `future.get()` blocks until each record is acknowledged, the lines alternate: one "Message has been sent", then that record's callback output, and only then is the next record sent:

```
Message has been sent
topic:bajie--partition:0--offset:39
Message has been sent
topic:bajie--partition:1--offset:40
Message has been sent
topic:bajie--partition:0--offset:40
Message has been sent
topic:bajie--partition:1--offset:41
Message has been sent
topic:bajie--partition:0--offset:41
Message has been sent
topic:bajie--partition:1--offset:42
Message has been sent
topic:bajie--partition:0--offset:42
Message has been sent
topic:bajie--partition:1--offset:43
Message has been sent
topic:bajie--partition:0--offset:43
Message has been sent
topic:bajie--partition:1--offset:44
```

References: https://blog.csdn.net/weixin_48067943/article/details/108228622 and https://blog.csdn.net/weixin_48067943/article/details/108228557

This article is shared from a WeChat official account as part of the Tencent Cloud self-media sync program.

Originally published: 2023-08-03.
